Tesla’s Optimus is entering factories and leading the trend of humanoid robots in the workplace. Other robots incorporate brain-computer interface technology for rehabilitation training. Shangwei Qiyuan’s first personal robot, Q1, opens up “secondary development” capabilities to scientific research, creative, and household users. Over the past few years, humanoid robots mostly remained in laboratories and on stage. Now their application boundaries are expanding toward scenarios that demand more continuous “operation”.

The Shangwei Qiyuan Q1 personal robot, only 80 cm tall, meets the “secondary creation” needs of scientific research, creative, and household users.

Data from market research firm IDC shows that the global humanoid robot market will enter an acceleration phase in 2025. Demand is mainly concentrated in cultural and entertainment performances, scientific research and education, data collection, guided tours and shopping assistance, industrial manufacturing, and warehousing and logistics.

However, as robots move from prototypes to large-scale applications, an unavoidable real-world problem is emerging: data is becoming a bottleneck for the further evolution of embodied intelligence.

Scenarios are expanding, but data is far from keeping up

Like autonomous driving and general-purpose large models, embodied intelligence depends heavily on data-driven development. Unlike those two fields, however, the data robots need is not just about seeing and speaking. It is a full-process record of contact, force application, collaboration, and failure in the real physical world.

The reality is that this kind of data is extremely scarce.

On the one hand, collecting real-world robot data is expensive and slow. Every grasp, insertion, move, and two-arm collaboration means hardware wear, human labor, and complex annotation. On the other hand, public videos and simulation data alone struggle to capture real operating intent from a first-person perspective. They also cannot cover key physical dimensions such as touch and contact force.

“If a robot only watches videos, it will never learn how to apply force,” an embodied intelligence researcher in Shanghai bluntly told a reporter from Science and Technology Innovation Board Daily. Robot bodies are converging in performance, and hardware solutions are growing increasingly similar. In that context, whoever first accumulates high-quality, generalizable real-world interaction data is more likely to gain a decisive say over models and the ecosystem in the next stage.

Against this background, collaboration on data, interfaces, and standards is increasing significantly. Recently, several robotics companies and research institutions in Shanghai have released data sets or advanced their construction.

The National-Local Joint Innovation Center for Humanoid Robots and Shanghai Weiti Technology Co., Ltd. jointly released White Tiger-VTouch, described as the world’s first large-scale cross-embodiment visual-tactile multimodal data set. It contains multimodal information such as visual-tactile sensor data, RGB-D data, and joint poses. It spans several embodiment configurations, including wheeled-arm robots, bipedal robots, and handheld terminals. The data set exceeds 60,000 minutes, and the industry regards it as one of the largest and most complete real-world visual-tactile interaction data sets in the world.

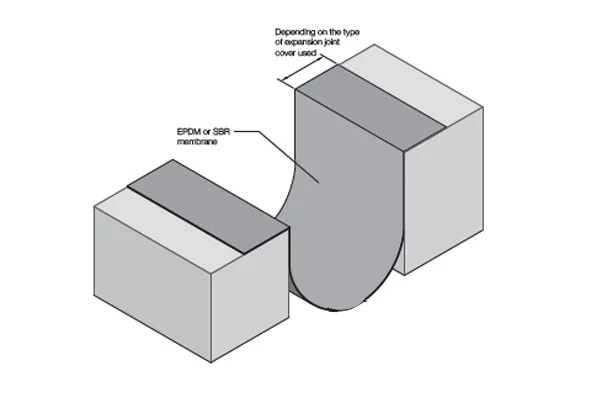

At the training ground of the National-Local Joint Innovation Center for Humanoid Robots (hereinafter the “National-Local Center”), multiple robots simultaneously perform a variety of real-world operation tasks every day.

Real-robot operation in typical scenarios at the National-Local Center training ground

According to the National-Local Center, White Tiger-VTouch departs from the earlier “single-task, manual collection” approach by introducing “matrix-style” task construction. The design is organized along three dimensions: two-arm collaborative structure, atomic operation type, and contact and tactile mode. It covers four major scenario types (household, industrial, catering, and special operations) and more than 380 task types.

“Bigger data is not always better”

In a recent interview with Science and Technology Innovation Board Daily and other media, Fourier CEO Gu Jie offered a more cautious view on the importance of data.

Gu Jie said that data is crucial to robots’ future ability to generalize, as the development of autonomous driving and early large models has repeatedly shown. However, he stressed that more data is not always better. Quality, structure, and source matter just as much.

“Repeating a task a thousand or even ten thousand times sometimes has little value. What is truly valuable is the ability to switch between different tasks and to capture the complete process of both success and failure,” Gu Jie pointed out.

He also believes that robot data cannot come from self-collection alone. The vast body of public videos on the Internet can serve as a foundation. Their limitation is the lack of a first-person perspective, which makes it hard to reflect humans’ real operating intent. Large amounts of first-person human motion and manipulation data are therefore also needed. These should be combined with embodiment data that robots collect in real environments.

In Fourier’s vision, an ideal data structure has three parts. Public videos form the large-scale base. First-person human interaction data provides the core supplement. A smaller but high-value set of real-world data collected by robots completes the structure. Although this last part makes up only a small share, its absolute volume may reach the hundreds of millions in the future.

Fourier GR-3 robot participates in hand-eye rehabilitation training

The competition around data is extending to a more fundamental level.

On the one hand, the steady maturation of technologies such as multimodal sensors and visual-tactile fusion makes it possible to collect real-world physical interaction data. On the other hand, demand for coordination on data formats, annotation systems, and training standards is rising rapidly.

Recently, Kuppers and Tashii announced a strategic partnership and stated explicitly that they will jointly promote the construction of embodied data standards. Fourier has also partnered with several hospitals, universities, and research institutions to launch the “Brain-Machine Embodied · Data Engine Joint Innovation Program”. The program aims to close the data loop between brain-computer interfaces and embodied intelligence in scenarios such as rehabilitation.

This article is from the WeChat official account “Science and Technology Innovation Daily”, author: Zhang Yangyang, published by 36Kr with authorization.