This section first analyzes the product’s quality characteristics and, based on the correlation coefficients between industrial product quality inspection results and quality-related parameters, selects the critical quality-related parameters whose correlation coefficients exceed a set threshold. It then establishes the SMOTE-XGBoost quality prediction model and optimizes its hyperparameters. Finally, it presents the active control method based on the prediction results.

Analysis of quality characteristics

To address product quality issues, this paper abstracts the product processing process as a manufacturing processing unit and treats the process of changing the product quality state as process characteristic data of processing quality. Additionally, it analyzes the process parameter data during equipment operation.

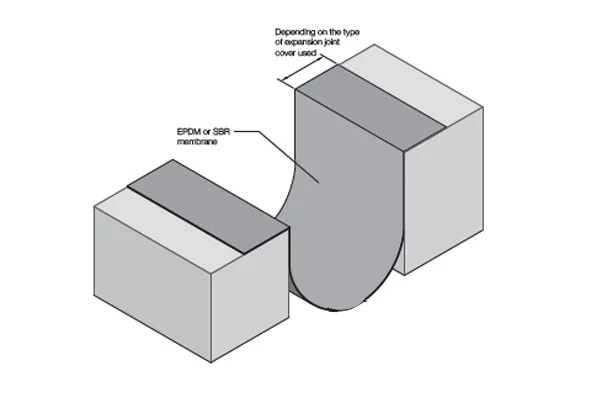

As shown in Fig. 5, in the manufacturing processing unit, \({X}_{i-1}\) represents the product state before the execution of the manufacturing processing unit, and \({X}_{i}\) represents the product state after its execution. From the perspective of quality data, \(M\_Data\) refers to the resource processing data received by the manufacturing processing unit; \({D}_{i-1}\) represents the product quality state data before the manufacturing processing unit processes it; \({D}_{i}\) refers to the output product quality state data processed by the manufacturing processing unit; \(\Delta Q\) represents the difference between the actual qualified rate of the output products and the qualified rate of the products with prediction results; and \(f\) is the threshold. When \(\Delta Q\) exceeds the threshold \(f\), the edge computing layer generates corresponding process adjustment control instructions \(h\) and sends them to the relevant processing equipment, for example to adjust the spindle speed and feed rate15.

Fig. 5 Manufacturing processing unit.

From the perspective of task execution, process \(i\) refers to the process of transforming the quality characteristics of a product from state \({X}_{i-1}\) to state \({X}_{i}\) through a series of processing methods.

From the perspective of quality characteristics, the current quality characteristic \({X}_{i}\) is the result of the current process equipment processing the quality characteristic \({X}_{i-1}\) in the current environment15. The process of changing quality characteristics is the process of transforming input data into output data through its processing mechanism.

As the manufacturing processing continues, manufacturing quality-related parameter data is collected one by one at a fixed frequency. The number of equipment process parameter types collected is \(i\), and each set of equipment quality-related parameter data collected is represented by an array, as shown in Eq. (1).

$$M\_Data=\left({m}_{1},{m}_{2},\cdots ,{m}_{i}\right)$$

(1)

In Eq. (1), \(M\_Data\) represents an array of equipment quality-related parameters collected at a certain moment, and \({m}_{i}\) represents the \(i\)-th parameter of array \(M\_Data\). As time passes and the processing progresses, more and more data is collected, forming a matrix of quality-related parameter data as shown in Eq. (2).

$$M\_Data=\left[\begin{array}{cccc}{m}_{11}& {m}_{21}& \cdots & {m}_{b1}\\ {m}_{12}& {m}_{22}& \cdots & {m}_{b2}\\ {m}_{13}& {m}_{23}& \cdots & {m}_{b3}\\ \vdots & \vdots & \ddots & \vdots \\ {m}_{1a}& {m}_{2a}& \cdots & {m}_{ba}\end{array}\right]$$

(2)

Selection of quality-related parameters

Throughout the production process of industrial goods, a large amount of quality-related data is collected through the equipment perception layer, including quality inspection results and the corresponding quality-related parameters. Based on these data, important rules for selecting quality-related parameters can be established, as described in section “Equipment process parameters”. This article selects the quality-related parameters that affect the indirectly dynamic equipment process data.

The selection rule mainly consists of performing a correlation analysis between the quality inspection results and the quality-related parameters in industrial product manufacturing, and selecting the key quality-related parameters whose correlation coefficient exceeds a set threshold. The correlation coefficient \(I\left({X}_{i}\right)\) between the quality inspection results and the quality-related parameters is calculated as:

$$I\left({X}_{i}\right)={\omega }_{0}{I}_{0}\left({X}_{i},{Y}_{0}\right)+{\omega }_{1}{I}_{1}({X}_{i},{Y}_{1})$$

(3)

$${I}_{0}\left({X}_{i},{Y}_{0}\right)=\sum_{x\in {X}_{i}}p\left(x,{Y}_{0}\right)\log \frac{p\left(x,{Y}_{0}\right)}{p\left(x\right)p\left({Y}_{0}\right)}$$

(4)

$${I}_{1}\left({X}_{i},{Y}_{1}\right)=\sum_{x\in {X}_{i}}p\left(x,{Y}_{1}\right)\log \frac{p\left(x,{Y}_{1}\right)}{p\left(x\right)p\left({Y}_{1}\right)}$$

(5)

where \({X}_{i}\) represents the \(i\)-th quality-related parameter, \({Y}_{0}\) represents the number of nonconforming industrial product manufacturing quality inspection results, and \({Y}_{1}\) represents the number of conforming industrial product manufacturing quality inspection results. \(p(x,{Y}_{0})\) represents the joint distribution of \({X}_{i}\) and \({Y}_{0}\), and \(p(x,{Y}_{1})\) represents the joint distribution of \({X}_{i}\) and \({Y}_{1}\). \(p\left(x\right)\), \(p\left({Y}_{0}\right)\), and \(p\left({Y}_{1}\right)\) are the probability distributions of variables \({X}_{i}\), \({Y}_{0}\), and \({Y}_{1}\), respectively. \({\omega }_{0}\) and \({\omega }_{1}\) are adjustment coefficients for data imbalance, with a sum of 1, generally determined based on the quality of the data samples obtained.

The features are ranked by importance according to the correlation coefficient \(I\left({X}_{i}\right)\) between the quality inspection results and the quality-related parameters in industrial product manufacturing. This yields a feature set \(C\), where \({m}_{n}\) represents the \(n\)-th feature value.

$$C=\left\{{m}_{a},{m}_{b},\cdots ,{m}_{n}\right\}$$

(6)
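The selection rule of Eqs. (3) to (6) can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions: each parameter is assumed to have been discretized into bins beforehand, labels are coded 0 (nonconforming) and 1 (conforming), and the function names `class_term`, `weighted_score`, and `select_features`, as well as the default weights of 0.5, are hypothetical and do not come from the paper.

```python
import math
from collections import Counter

def class_term(values, labels, target):
    """Eq. (4)/(5): sum over x of p(x, Y) * log(p(x, Y) / (p(x) * p(Y))) for one class."""
    n = len(values)
    count_x = Counter(values)
    # Joint counts of (X = x, label = target)
    joint = Counter(v for v, y in zip(values, labels) if y == target)
    p_y = sum(joint.values()) / n
    total = 0.0
    for x, count in joint.items():
        p_xy = count / n
        total += p_xy * math.log(p_xy / ((count_x[x] / n) * p_y))
    return total

def weighted_score(values, labels, w0=0.5, w1=0.5):
    """Eq. (3): weighted sum of the nonconforming (0) and conforming (1) terms."""
    return w0 * class_term(values, labels, 0) + w1 * class_term(values, labels, 1)

def select_features(columns, labels, threshold, w0=0.5, w1=0.5):
    """Eq. (6): names of parameters whose score exceeds the threshold, best first."""
    scores = {name: weighted_score(vals, labels, w0, w1)
              for name, vals in columns.items()}
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [name for name, score in ranked if score > threshold]

# Toy data (invented for illustration): one informative and one uninformative parameter.
labels = [0, 0, 1, 1, 1, 1]
columns = {
    "spindle_speed_bin": ["hi", "hi", "lo", "lo", "lo", "lo"],
    "noise_bin": ["a", "b", "a", "b", "a", "b"],
}
selected = select_features(columns, labels, threshold=0.1)
```

In this toy case only the parameter whose bins separate the two classes is retained; in practice the weights \({\omega }_{0}\) and \({\omega }_{1}\) and the threshold would be set from the actual sample quality.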

SMOTE-XGBoost algorithm for quality prediction

Data preprocessing based on SMOTE

According to the factory survey results, on a stable brake disc production line the majority of final products are qualified, and only a small number have quality problems (unqualified products). The brake disc production line produces more than 1000 products per day, of which over 95% are qualified. From a data mining perspective, this means that the input labels of the prediction model are imbalanced. Imbalanced label data is common in various industrial fields.

This article adopts the Synthetic Minority Oversampling Technique (SMOTE) algorithm to address the issue of imbalanced data. The core idea of the algorithm is to perform interpolation on the minority class samples in the dataset based on the k-nearest neighbor rule (as shown in Fig. 6), thereby generating more minority class samples39. As the production dataset of brake discs is imbalanced, SMOTE is used in this section to balance the dataset. The main steps of the algorithm are as follows:

Fig. 6 SMOTE oversampling algorithm principles schematic.

The dataset of brake disc processing collected by the production line is an imbalanced dataset. Based on the number of minority class samples \({N}_{min}\) and majority class samples \({N}_{max}\), the number of samples \(N\) to synthesize for each minority class sample is calculated:

$$N=\frac{{N}_{max}}{{N}_{min}}-1$$

(7)

For each unqualified product data sample (minority class) \({X}_{j}\), where \({X}_{j}\) is one of the \({N}_{min}\) minority class samples, the \(k\) nearest neighbors of \({X}_{j}\) among the minority class samples are found using the Euclidean distance as the measurement standard (\(k\) is usually set to 5), and one of them is selected at random.

Assuming the selected neighboring point is \({X}_{k}\), the new synthetic sample point \({X}_{new}\) is generated according to the following formula.

$${X}_{new}={X}_{k}+rand\left(0,1\right)\times \left({X}_{j}-{X}_{k}\right)$$

(8)

where \(rand\left(0,1\right)\) represents a random number between 0 and 1. In total, \(N\times {N}_{min}\) new minority class samples are generated and merged with the original dataset to obtain a balanced dataset, which is then input into XGBoost for identification.
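The oversampling steps of Eqs. (7) and (8) can be sketched as follows. This is a minimal pure-Python illustration, not the implementation used in the paper: the function name `smote`, the rounding of \(N\) to an integer, and the fixed random seed are assumptions made here for the example.

```python
import math
import random

def smote(minority, n_majority, k=5, seed=0):
    """Return synthetic minority samples generated as in Eqs. (7) and (8)."""
    if len(minority) < 2:
        raise ValueError("at least two minority samples are needed for interpolation")
    rng = random.Random(seed)
    n_min = len(minority)
    # Eq. (7): samples to synthesize per minority sample (rounded, an assumption)
    per_sample = max(0, round(n_majority / n_min) - 1)
    synthetic = []
    for j, x_j in enumerate(minority):
        # k nearest minority neighbors of x_j by Euclidean distance
        others = [x for i, x in enumerate(minority) if i != j]
        neighbors = sorted(others, key=lambda x: math.dist(x, x_j))[:k]
        for _ in range(per_sample):
            x_k = rng.choice(neighbors)   # randomly selected neighbor
            r = rng.random()              # rand(0, 1)
            # Eq. (8): a point on the segment between x_k and x_j
            synthetic.append([a + r * (b - a) for a, b in zip(x_k, x_j)])
    return synthetic

# Toy example: 3 unqualified samples against 12 qualified ones.
minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
new_samples = smote(minority, n_majority=12, k=2)
balanced_minority_count = len(minority) + len(new_samples)
```

With 3 minority and 12 majority samples, \(N=3\) synthetic points are created per minority sample, so the minority class grows to 12 samples after merging.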

Predictive model based on XGBoost

XGBoost is an ensemble learning framework based on the gradient boosting algorithm, proposed by Dr. Tianqi Chen and his colleagues40. Like the traditional Gradient Boosting Decision Tree (GBDT), it is built on decision trees. However, by using a second-order Taylor expansion and adding regularization terms, XGBoost effectively controls model complexity and greatly reduces model variance. The trained model is also simpler and more stable41.

Assuming that the input samples are \(\left\{\left({x}_{1},{y}_{1}\right),\left({x}_{2},{y}_{2}\right),\cdots ,\left({x}_{n},{y}_{n}\right)\right\}\), the output of the XGBoost model can be represented as the sum of \(K\) weak learner outputs:

$${\widehat{y}}_{i}=\sum_{k=1}^{K}{f}_{k}\left({x}_{i}\right)$$

(9)

where \({f}_{k}\left({x}_{i}\right)\) represents the output of the \(k\)-th weak learner.

The model’s bias and variance determine its prediction accuracy. The loss function represents the bias of the model; to reduce the variance and prevent overfitting, a regularization term is added to the objective function. The objective function \(L\) therefore comprises the model’s loss function \(l\) and a regularization term \(\Omega \) that suppresses model complexity. The objective function to minimize in function space is:

$$L=\sum_{i}l\left({y}_{i},{\widehat{y}}_{i}\right)+\sum_{k}\Omega \left({f}_{k}\right)$$

(10)

$$\Omega \left({f}_{k}\right)=\gamma T+\frac{1}{2}\lambda {\parallel \omega \parallel }^{2}$$

(11)

Here, \(L\) represents the objective function, \(l\) represents the loss function, \(\Omega \left({f}_{k}\right)\) represents the regularization function, \(T\) is the number of leaf nodes, \(\omega \) is the vector of leaf node weights, and \(\gamma \) and \(\lambda \) are regularization coefficients. In the XGBoost model, most weak learners are based on Classification and Regression Trees (CART). Because the model is built additively, each round of optimization only focuses on the objective function of the \(t\)-th classification and regression tree, given the previous models.

$${\widehat{y}}_{i}^{\left(t\right)}={\widehat{y}}_{i}^{\left(t-1\right)}+{f}_{t}\left({x}_{i}\right)$$

(12)

$${L}^{\left(t\right)}=\sum_{i=1}^{n}l\left({y}_{i},{\widehat{y}}_{i}^{\left(t-1\right)}+{f}_{t}\left({x}_{i}\right)\right)+\Omega \left({f}_{t}\right)$$

(13)

Next, perform a second-order Taylor expansion of the loss function of XGBoost around the previous prediction \({\widehat{y}}_{i}^{\left(t-1\right)}\):

$${L}^{\left(t\right)}\simeq \sum_{i=1}^{n}\left[l\left({y}_{i},{\widehat{y}}_{i}^{\left(t-1\right)}\right)+{{\text{g}}}_{i}{f}_{t}\left({x}_{i}\right)+\frac{1}{2}{h}_{i}{f}_{t}^{2}\left({x}_{i}\right)\right]+\Omega \left({f}_{t}\right)$$

(14)

In the above equation:

$${{\text{g}}}_{i}={\partial }_{{\widehat{y}}^{\left(t-1\right)}}l\left({y}_{i},{\widehat{y}}_{i}^{\left(t-1\right)}\right)$$

(15)

$${h}_{i}={\partial }_{{\widehat{y}}^{\left(t-1\right)}}^{2}l\left({y}_{i},{\widehat{y}}_{i}^{\left(t-1\right)}\right)$$

(16)

Here, \({{\text{g}}}_{i}\) and \({h}_{i}\) are the first-order and second-order derivatives of the loss function with respect to the previous prediction for each sample, respectively. Since \(l\left({y}_{i},{\widehat{y}}_{i}^{\left(t-1\right)}\right)\) does not depend on \({f}_{t}\), the optimization of the objective function can be transformed into the process of finding the minimum value of a quadratic function of \({f}_{t}\).
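To make the second-order expansion concrete, the sketch below evaluates \({{\text{g}}}_{i}\) and \({h}_{i}\) for one specific loss. The paper does not state which loss \(l\) is used, so the binary logistic loss on a raw score is an assumption made here; for that loss, the derivatives are \(p-y\) and \(p(1-p)\) with \(p\) the sigmoid of the previous score. The helper `best_leaf_weight` uses the standard closed-form minimizer of the regularized quadratic for a single leaf, \(-\sum {{\text{g}}}_{i}/(\sum {h}_{i}+\lambda )\), which is part of the usual XGBoost formulation but is not written out in this section. All function names are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def logloss(y, score):
    """Binary logistic loss l(y, y_hat) on a raw (pre-sigmoid) score."""
    p = sigmoid(score)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def grad_hess(y, score):
    """Eqs. (15) and (16) for the logistic loss: g = p - y, h = p * (1 - p)."""
    p = sigmoid(score)
    return p - y, p * (1 - p)

def taylor_loss(y, score, step):
    """Second-order approximation of l(y, score + step), as in Eq. (14)."""
    g, h = grad_hess(y, score)
    return logloss(y, score) + g * step + 0.5 * h * step ** 2

def best_leaf_weight(gs, hs, lam):
    """Minimizer of sum(g) * w + 0.5 * (sum(h) + lam) * w**2 for one leaf."""
    return -sum(gs) / (sum(hs) + lam)

# Compare the exact loss with its quadratic approximation for a small step.
exact = logloss(1, 0.3 + 0.1)
approx = taylor_loss(1, 0.3, 0.1)
approximation_error = abs(exact - approx)
```

For small steps the quadratic approximation tracks the exact loss closely, which is what allows each boosting round to be solved as a quadratic minimization.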

SMOTE-XGBoost with jointly optimized hyperparameters

(1) SMOTE-XGBoost model

Hyperparameter optimization methods mainly include grid search, random search, and heuristic algorithms42. This article uses the grid search method to optimize the three hyperparameters \(k\), \(e\), and \(T\) defined below, in order to obtain the optimal predictive model.

The SMOTE algorithm and the XGBoost algorithm both have hyperparameters that need to be set before training. The setting of hyperparameters affects the performance of predictive models. Previous research has mainly focused on the hyperparameters of the classification or regression model alone. Therefore, this paper treats SMOTE and XGBoost as a whole and proposes a joint hyperparameter optimization method, called SMOTE-XGBoost, to improve the performance of quality prediction models. Specifically, this paper focuses on optimizing the hyperparameters \(k\) in SMOTE (number of nearest neighbors for selecting samples), \(e\) in XGBoost (number of decision trees), and \(T\) in XGBoost (number of leaf nodes). The maximum \({\text{AUC}}\) score is selected as the optimization objective to obtain the best hyperparameters. The principle of joint hyperparameter optimization is as follows: the original SMOTE-XGBoost model is trained on historical data, which can be represented as:

$$M=\text{SMOTE-XGBoost}(k,e,T)$$

(17)

where \(k\) represents the number of nearest neighbors selected in SMOTE, \(e\) represents the number of decision trees in XGBoost, and \(T\) represents the number of leaf nodes in XGBoost. To optimize the hyperparameters of the SMOTE-XGBoost model with the goal of obtaining the maximum AUC score, the following formula is used:

$$\begin{aligned} f &= \max \left[ \sum_{i=1}^{t} L\left( {y}_{i},{\widehat{y}}_{i} \right) \right] \\ &= \max \left\{ \sum_{i=1}^{t} L\left[ {y}_{i},\text{SMOTE-XGBoost}\left(k,e,T|{D}_{1:t}\right) \right] \right\} \\ &= G\left(k,e,T|{D}_{1:t}\right) \end{aligned}$$

(18)

In this expression, \({y}_{i}\) represents the true quality result; \({\widehat{y}}_{i}\) represents the predicted quality result; \(\text{SMOTE-XGBoost}(k,e,T|{D}_{1:t})\) represents the quality prediction function; \(G(k,e,T|{D}_{1:t})\) is a non-analytic function of the decision variables \(k\), \(e\), and \(T\); \(L\) is the \({\text{AUC}}\) scoring formula; and \({D}_{1:t}\) represents the first \(t\) data points in the test set.
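Because \(G(k,e,T|{D}_{1:t})\) has no analytic form, the grid search simply evaluates it at every candidate combination and keeps the best one. The sketch below shows that loop only. The callback `evaluate` is a hypothetical placeholder: in a real pipeline it would apply SMOTE with \(k\) neighbors, train XGBoost with \(e\) trees and \(T\) leaf nodes, and return the AUC on held-out data. The toy scoring function and the candidate grids are invented for illustration and carry no experimental meaning.

```python
from itertools import product

def grid_search(evaluate, k_values, e_values, t_values):
    """Return the (k, e, T) combination with the highest score and that score."""
    best_params, best_auc = None, float("-inf")
    for k, e, t in product(k_values, e_values, t_values):
        auc = evaluate(k, e, t)  # stands in for G(k, e, T | D)
        if auc > best_auc:
            best_params, best_auc = (k, e, t), auc
    return best_params, best_auc

def toy_evaluate(k, e, t):
    """Hypothetical stand-in for training and scoring a SMOTE-XGBoost model."""
    return 1.0 - 0.01 * abs(k - 5) - 0.001 * abs(e - 100) - 0.01 * abs(t - 8)

best_params, best_auc = grid_search(
    toy_evaluate,
    k_values=[3, 5, 7],
    e_values=[50, 100, 200],
    t_values=[4, 8, 16],
)
```

Searching the three hyperparameters in one product grid is what makes the optimization joint: the best \(k\) is chosen in the context of \(e\) and \(T\) rather than tuned separately.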

(2) Model evaluation indicators

To effectively evaluate the reliability of predictive models, comparative experiments of different algorithms are conducted using the coefficient of determination (\({R}^{2}\)) and the AUC as evaluation metrics to assess the relationship between the predicted values and true values of the models. AUC is defined as the area under the ROC curve, enclosed with the coordinate axes. It is a comprehensive indicator that is commonly used to measure classification performance31,43. The higher the AUC, the better the algorithm performance.

Scoring formula for \({R}^{2}\):

$${R}^{2}=1-\frac{{\sum }_{i=1}^{N}{\left({y}_{i}-{\widehat{y}}_{i}\right)}^{2}}{{\sum }_{i=1}^{N}{\left({y}_{i}-\overline{y }\right)}^{2}}$$

(19)

In this expression, \({y}_{i}\) represents the true value, \({\widehat{y}}_{i}\) represents the predicted value, \(\overline{y }\) represents the sample mean, and \(N\) represents the sample size. A higher \({R}^{2}\) value indicates better performance. When the predictive model makes no errors, \({R}^{2}\) achieves the maximum value of 1.
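As a quick check of the \({R}^{2}\) score, which is one minus the ratio of the residual sum of squares to the total sum of squares, a minimal Python sketch is given below. The function name `r2_score` and the toy values are chosen here for illustration.

```python
def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((y - p) ** 2 for y, p in zip(y_true, y_pred))
    ss_tot = sum((y - mean) ** 2 for y in y_true)
    return 1 - ss_res / ss_tot

perfect = r2_score([1, 2, 3], [1, 2, 3])    # error-free predictions
mean_only = r2_score([1, 2, 3], [2, 2, 2])  # always predicting the sample mean
```

Error-free predictions give the maximum value of 1, while a model that always predicts the sample mean scores 0.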

Scoring formula for \({\text{AUC}}\):

$$AUC=\frac{\sum_{i\in \text{positive sample set}}r(i)-\frac{M(M+1)}{2}}{M(N-M)}$$

(20)

In this expression, \(r(i)\) represents the rank of positive sample \(i\) when all samples in the data set are sorted by predicted score in ascending order, \(M\) represents the number of positive samples in the data set, and \(N\) represents the total number of samples in the data set.
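The rank-based AUC formula can be sketched directly in Python. The function name `auc_by_rank` is hypothetical, labels are assumed to be coded 1 for positive and 0 for negative, and the use of average ranks for tied scores is an assumption added here, since the formula itself does not say how ties are handled.

```python
def auc_by_rank(labels, scores):
    """AUC from the rank sum of positive samples (ranks are 1-based, ascending)."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        # Extend j over a run of tied scores
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1  # tied samples share the average rank
        for t in range(i, j + 1):
            ranks[order[t]] = average_rank
        i = j + 1
    m = sum(labels)   # number of positive samples, M
    n = len(labels)   # total number of samples, N
    rank_sum = sum(r for r, y in zip(ranks, labels) if y == 1)
    return (rank_sum - m * (m + 1) / 2) / (m * (n - m))

auc = auc_by_rank([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
```

In this toy case the positive samples hold ranks 2 and 4, so the score is \((6-3)/(2\times 2)=0.75\).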

(3) Active control methods

In the actual production process, manufacturing process data of industrial products is first transmitted to edge computing nodes through Ethernet. The edge computing nodes use important quality-related parameter selection rules to filter and reduce data, and make real-time quality predictions for products as qualified or non-qualified based on the quality active prediction model deployed on the edge computing nodes.

Active control methods refer to calculating the difference between the actual qualified rate of the produced product and the predicted qualified rate of products. If this difference is greater than a certain threshold, the edge computing layer will generate corresponding process adjustment control instructions and send them to the relevant processing equipment, such as adjusting spindle speed, feed rate, etc.

In the edge computing-based active control method for industrial product manufacturing quality prediction, the difference \(\Delta Q\) between the actual qualified rate of the produced products and the qualified rate of the products with prediction results is calculated as follows:

$$\Delta Q=\frac{{q}_{1}}{{Q}_{1}}-\frac{{q}_{2}}{{Q}_{2}}$$

(21)

In the formula, \({q}_{1}\) and \({q}_{2}\) respectively represent the number of qualified products in the actual output, and the number of qualified products with prediction results; \({Q}_{1}\) and \({Q}_{2}\) respectively represent the total number of products in the actual output, and the total number of products with prediction results.
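The trigger logic of the active control method can be sketched as below. This is an illustration under stated assumptions rather than the deployed edge logic: the function names `delta_q` and `needs_adjustment` are hypothetical, the counts and thresholds are invented, and comparing the magnitude of \(\Delta Q\) with the threshold (instead of its signed value) is an assumption, since the text only says the difference must exceed the threshold.

```python
def delta_q(q1, Q1, q2, Q2):
    """Eq. (21): actual qualified rate minus qualified rate of predicted products."""
    return q1 / Q1 - q2 / Q2

def needs_adjustment(q1, Q1, q2, Q2, threshold):
    """True when the deviation is large enough to issue adjustment instructions."""
    # Assumption: deviations in either direction trigger an adjustment
    return abs(delta_q(q1, Q1, q2, Q2)) > threshold

# Invented example: 940 of 1000 actual products qualified, 960 of 1000 predicted qualified.
difference = delta_q(940, 1000, 960, 1000)
trigger_tight = needs_adjustment(940, 1000, 960, 1000, threshold=0.01)
trigger_loose = needs_adjustment(940, 1000, 960, 1000, threshold=0.05)
```

When the check returns true, the edge computing layer would generate process adjustment instructions, such as changes to spindle speed or feed rate, for the relevant equipment.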